HPC & AI Market Update

David Baldwin

At the Supercomputing (SC, USA) and International Supercomputing (ISC, Germany) conferences, Hyperion Research provides an excellent six-monthly update on the state of the High Performance Computing (HPC) & Artificial Intelligence (AI) market and the trends we can expect to see over the next few years.

The full briefing pack can be found here: Hyperion Research HPC and AI Market Update SC24

For the Research Community at Leeds, what impacts and opportunities can we expect to see over the next year or so?

HPC & AI Market

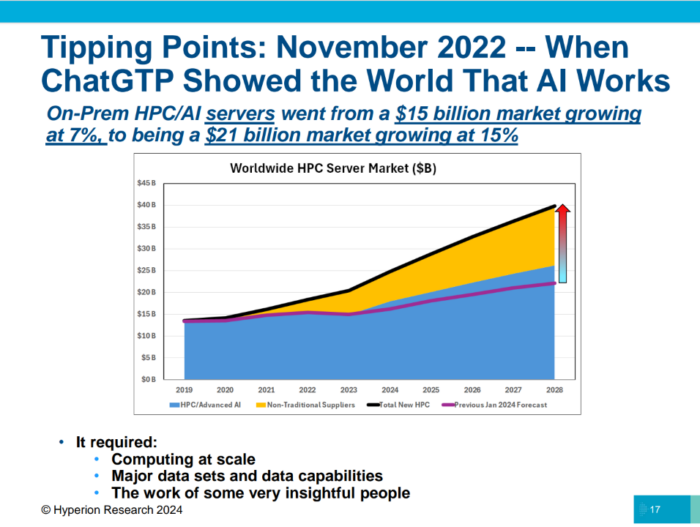

Hyperion talk of tipping points (pg. 13-17), which are rare but transformational. They believe that ChatGPT (i.e. the demonstration that AI works) was one of those, and that we are now beyond the hype of AI and into a sustained, and therefore different, marketplace. Their chart on pg. 17 reflects this.

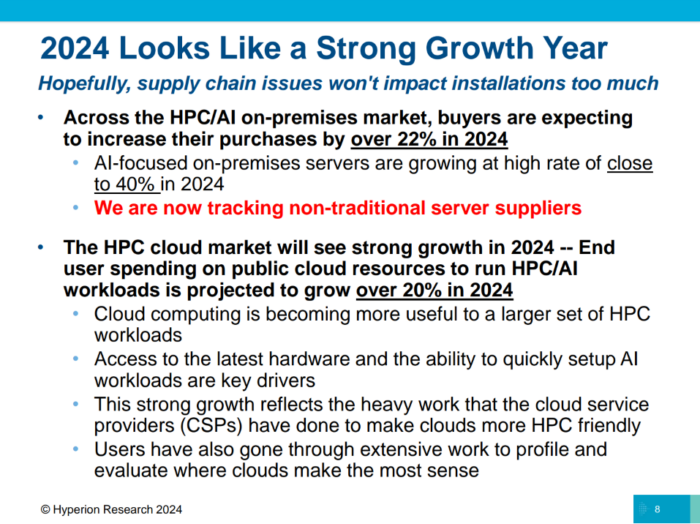

A larger market tends to mean more diversity of technologies and vendors, which drives lower costs and better choices for our workloads. Balanced against this might be some more challenges in the supply chains, although those have improved. Hyperion also quote 40% growth in the AI-focused market, which might mean more traditional HPC and scientific workloads get a little left out as vendors chase the AI bubble. In general, a healthy market helps us, and we may even see some players over-stocking and offering discounts on some SKUs (stock keeping units, the building blocks for an HPC cluster), which we might be able to take advantage of.

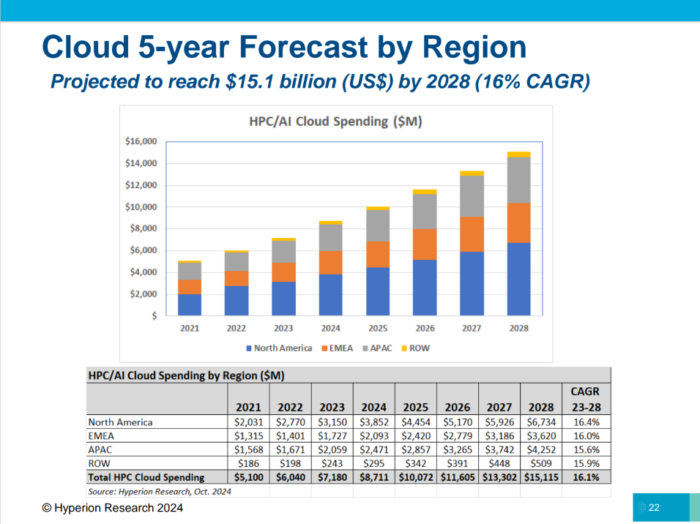

Cloud

The cloud market continues to grow and there has been a concerted effort by the cloud service providers (CSPs) to listen to the problems HPC users have previously had running their workloads and to make their offerings more applicable to us. In my mind Azure do this better than AWS, but it is an encouraging development that all the CSPs are focusing on HPC. For Leeds, on-premise HPC is cheaper than the cloud, but that doesn't mean the cloud doesn't have a place or that we don't use it. CSPs often have newer and different technology (the latest GPUs, for instance), a broader software stack, great training and migration tools and, of course, the ability to turn it off when you don't need it, which is good for very spiky workloads. So it remains a tool in our toolbox.
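The trade-off between an always-on on-premise system and a cloud service you can switch off can be sketched as a simple break-even calculation. The Python below is an illustration only: the function name and every figure in it are hypothetical, not Leeds or vendor prices, and it assumes a single fixed annual cost per on-premise node against a flat hourly cloud rate.

```python
HOURS_PER_YEAR = 24 * 365  # 8760 hours


def break_even_utilisation(on_prem_annual_cost, cloud_hourly_rate):
    """Fraction of the year a node must be busy before owning it
    costs less than renting the cloud equivalent by the hour."""
    return on_prem_annual_cost / (cloud_hourly_rate * HOURS_PER_YEAR)


# Hypothetical figures in arbitrary currency units (assumptions, not real prices).
on_prem_annual_cost = 8760.0  # all-in cost of one on-premise node per year
cloud_hourly_rate = 4.0       # cost of a comparable cloud node per hour

utilisation = break_even_utilisation(on_prem_annual_cost, cloud_hourly_rate)
print(f"Break-even utilisation: {utilisation:.0%}")
```

With these made-up numbers, a node that is busy for more than a quarter of the year is cheaper on-premise, while a very spiky workload that runs less often than that favours the cloud.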

Exascale Systems

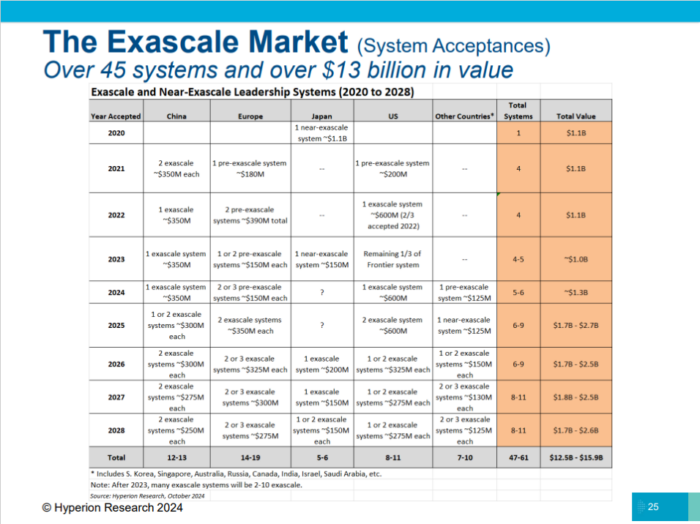

I won't dwell overly on the exascale systems (pg. 25), the very largest in the world and 100x larger than our new Aire system (and 100x more expensive!), as we will never build one. However, it is worth keeping an eye on who is building these systems, as what is extraordinary now becomes routine within 5-7 years in HPC. Serious money and national pride are tied up in these systems, and the announcement that funding for the UK's exascale system at EPCC (University of Edinburgh) had been cut was disappointing. The US and China continue to lead the exascale charge, with Europe catching up in the next few years. The UK has no published plans yet to replace the cancelled system in Edinburgh.

Looking a little further out - what about quantum computing (QC)?

Quantum Computing

There is significant investment in QC (pg. 49, $1B), and Hyperion are expecting growth at a healthy rate. There is also plenty of innovation and progress in the space, for instance Google's recent announcement about 'solving' the error correction problem with their Willow system; error correction has been a serious roadblock to progress on QC. There are still plenty of issues to fix (pg. 54), especially around standards, budgets & procurement and, of course, training staff. Leeds is not actively pursuing any QC hardware and I wouldn't expect us to. In the first instance, people might be interested in simulators running on classical computers to prove the concept, and will then look to run their code on real hardware. Given the difficulty (and expense) of building a quantum computer, we would look to use shared resources, either from another Higher Education Institution as and when they build them or, more likely, from a specialist cloud provider. If you are already thinking about this, let us know so we can work with you on planning the next steps.

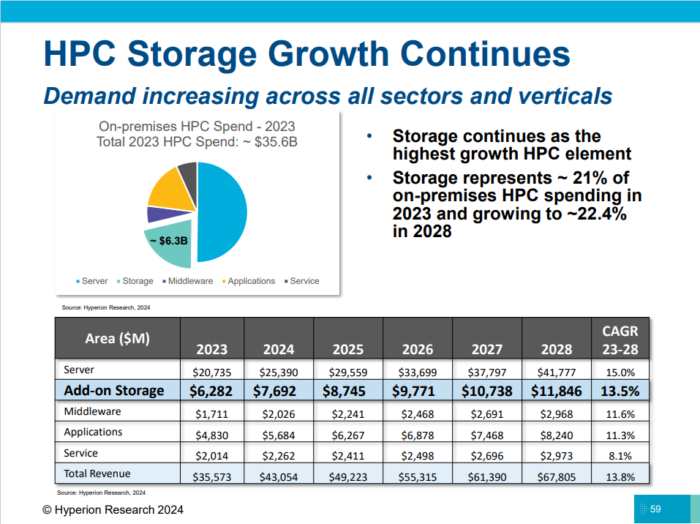

Storage

Storage continues to be a large and growing part of any Advanced Research Computing (ARC) or HPC budget and shows no signs of slowing down. Hyperion quote a third of the budget going to storage, split equally between persistent and ephemeral storage (for Leeds this means Isilon and Lustre respectively), and this is approximately what we have experienced. The implication is that fast, high-throughput storage (normally attached to the compute nodes with a high-speed network) is becoming more important for the workloads. In the filesystem space, NFS and Lustre continue to dominate, but watch out for newcomers such as WekaIO starting to make inroads into Spectrum Scale (GPFS) & Lustre's stranglehold on the parallel filesystem market. Moving the bits to the flops has always been a challenge, and this shift to more and faster ephemeral storage is an indication that there are better storage options and more compute (i.e. cores) available to us. Which brings us on to networks (pg. 65).
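As a worked illustration of the split Hyperion quote, the sketch below divides a budget into a third for storage, shared equally between persistent and ephemeral. The function name and the total of 600 are hypothetical, in arbitrary units, and are not a Leeds budget.

```python
from fractions import Fraction


def storage_split(total_budget):
    """Split a budget using the proportions quoted above:
    one third to storage, halved between persistent and ephemeral."""
    total = Fraction(total_budget)
    storage = total / 3        # one third of the budget goes to storage
    persistent = storage / 2   # e.g. Isilon at Leeds
    ephemeral = storage / 2    # e.g. Lustre at Leeds
    return {
        "persistent": persistent,
        "ephemeral": ephemeral,
        "everything_else": total - storage,
    }


# Hypothetical total budget in arbitrary units (an assumption for illustration).
split = storage_split(600)
for name, amount in split.items():
    print(f"{name}: {float(amount):.0f}")
```

Each storage tier comes out at a sixth of the total, so for every 600 units spent, 100 go on persistent storage and 100 on ephemeral.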

Networks

There is a split in the market about how you build your high-speed, low-latency network. Is it for moving data from storage to the compute, for node-to-node traffic (e.g. MPI), or both? Some people build separate networks for the two use cases; others, like Leeds, build just one. Our choice is driven primarily by cost, but also to some extent by the relatively low number of jobs we run that span many nodes. For large models, however, having the traffic on different networks can be important. On the choice of networks, InfiniBand is dominant, followed by Ethernet, with Omni-Path a distant third. I don't see this changing very much in the future; NVIDIA's purchase of Mellanox (the main InfiniBand vendor), announced in 2019, means it will be the de facto choice for many people, especially in AI, as NVIDIA have sorted out many of the supply chain issues that plagued them over the last few years.

Conclusions

There is a healthy and diverse HPC/AI marketplace and it is strengthening year on year, driven in part by the cloud providers building more HPC capacity, which in turn is driven by AI workloads. The on-premise market is still strong and well funded, and all this is good news for Leeds. A healthy marketplace drives innovation forward and costs down, which enables us to procure more and better storage, compute and network capabilities and hence produce great research.

Author

David Baldwin

Director of Digital Research