Ready, Set, Compute: Aire at Work

Patricia Ternes, David Baldwin, Maeve Murphy Quinlan, Sorrel Harriet

Since its official launch in February 2025, our latest on-site High-Performance Computing (HPC) cluster, Aire, has been steadily gaining traction across the research community at Leeds. Now, five months in, we’re seeing clear signs that the system is not only meeting expectations—it’s becoming a key part of researchers’ workflows.

In this blog post, we’re sharing data from the last three months (April to June)—a period that highlights just how much momentum Aire has built. Across compute, storage, and user engagement, the growth has been remarkable.

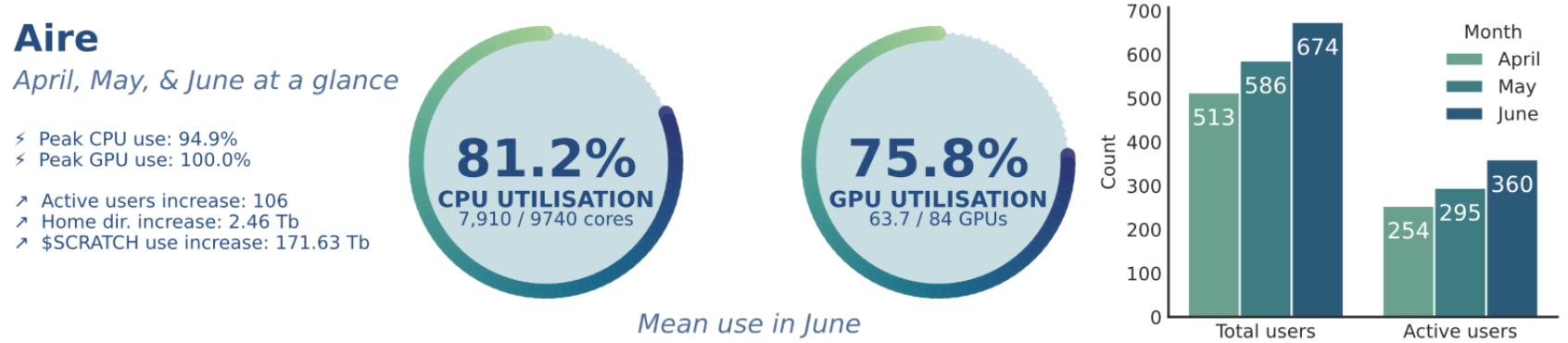

Figure 1: Overview of Aire statistics for April, May, and June, including peak and mean CPU and GPU utilisation, numbers of users, and use of the storage spaces on the system.

🚀 High Demand and Rising Usage

Aire has seen steady and consistent growth in resource utilisation over the past three months, and by June it was handling workloads at a scale that already exceeds the peak usage we saw on its predecessor, ARC4. This is a strong indication that researchers are actively relying on Aire for their core workloads—and that the system is performing well under load.

CPU and GPU Utilisation

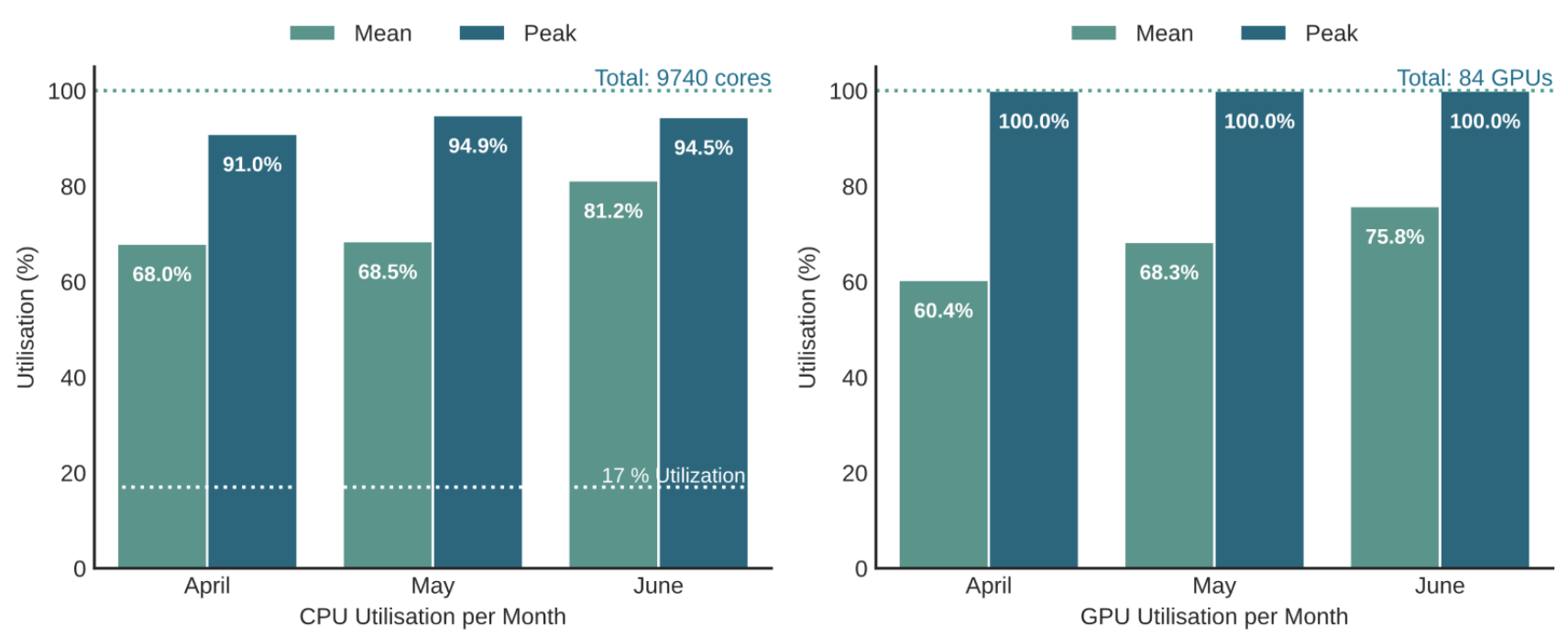

Between April and June, average CPU usage rose from 6,620 cores to 7,910 cores. The June figure represents 81% of Aire’s total available compute capacity (9,744 cores).

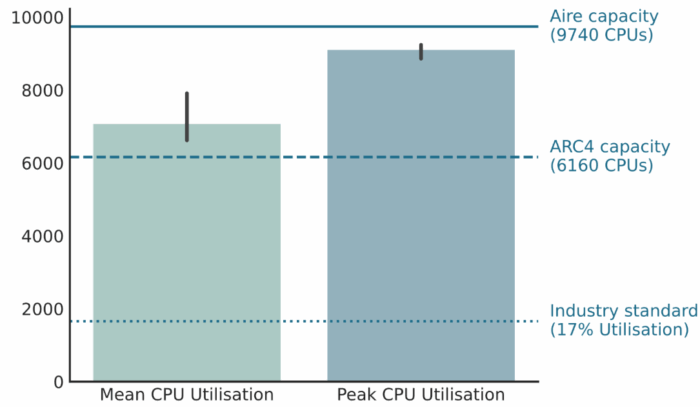

To put this in context, recent industry-wide data shows that large-scale compute environments typically see average CPU utilisation of around 17% (source). At 81%, Aire is already operating at an unusually high level of efficiency—especially considering the diversity of job types and the early stage of the system.

Figure 2: Percentage utilisation of both CPU and GPU resources per month. The total cores available are also shown, as is the industry standard of 17% utilisation of HPC systems.

Peak CPU usage has consistently exceeded 9,000 cores since May. For comparison, our previous HPC system, ARC4, had 6,160 general-use cores, meaning Aire is already exceeding ARC4’s capacity on a regular basis.

Figure 3: Aire CPU utilisation (both mean usage and peak usage; both averaged over April, May, and June) in comparison to ARC4 general CPU capacity, and industry standards for HPC efficiency.

On the GPU side, all 84 NVIDIA L40S GPUs have been in use at peak across all three months, and average GPU usage is steadily rising—from 50.7 GPUs in April to 63.7 GPUs in June, which represents 5.3 times the GPU usage of ARC4.
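The utilisation percentages follow directly from the averages and capacities quoted above. As a minimal sketch (the function and variable names are ours, chosen for illustration), the arithmetic looks like this:

```python
# Capacities and monthly mean usage as quoted in this post.
TOTAL_CORES = 9_744
TOTAL_GPUS = 84


def utilisation_pct(used: float, capacity: float) -> float:
    """Return usage as a percentage of capacity, rounded to one decimal place."""
    return round(100 * used / capacity, 1)


cpu_april = utilisation_pct(6_620, TOTAL_CORES)
cpu_june = utilisation_pct(7_910, TOTAL_CORES)
gpu_april = utilisation_pct(50.7, TOTAL_GPUS)
gpu_june = utilisation_pct(63.7, TOTAL_GPUS)

print(f"CPU: {cpu_april}% (April) -> {cpu_june}% (June)")
print(f"GPU: {gpu_april}% (April) -> {gpu_june}% (June)")
```

On these figures, mean CPU utilisation moves from roughly 68% to 81%, and mean GPU utilisation from roughly 60% to 76%.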

Table 1: CPU and GPU utilisation on Aire per month, both average (mean) and maximum (peak) use

Storage and Memory Growth: Data-Driven Research in Action

With more users comes more data—and Aire is keeping up:

- Home directory usage grew from 3.48 TB (April) to 5.94 TB (June).

- Scratch space (Lustre filesystem) nearly doubled in one month: from 88.57 TB (May) to 171.63 TB (June).

- Memory usage followed a similar path: average memory used climbed from 18.1 TB to 26.5 TB, with peak usage reaching 42.1 TB.
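To make the growth rates behind these figures explicit, here is a small sketch that computes the percentage change for each quantity listed above. The helper name is ours, and the memory figures are taken as start and end values for the reporting period, which is an assumption since the months are not stated.

```python
def growth_pct(start: float, end: float) -> float:
    """Percentage change from start to end, rounded to one decimal place."""
    return round(100 * (end - start) / start, 1)


# (start, end) values in TB, as quoted in the list above.
usage_tb = {
    "home": (3.48, 5.94),         # April -> June
    "scratch": (88.57, 171.63),   # May -> June
    "mean memory": (18.1, 26.5),  # period assumed, not stated in the post
}

growth = {name: growth_pct(start, end) for name, (start, end) in usage_tb.items()}

for name, pct in growth.items():
    print(f"{name}: +{pct}%")
```

This gives growth of about 71% for home directories, 94% for scratch, and 46% for mean memory use.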

Figure 4: Storage growth on Aire, both on the Scratch partition (Lustre file system) and in home directories. Data for Scratch storage in April is not available.

These numbers reflect not only increasing workloads, but also the system’s ability to support data-intensive research at scale. The upcoming rollout of Globus—a data management and transfer platform that allows researchers to move large datasets between different systems—is expected to further increase demand for memory and storage capacity in the coming months.

Slurm in Numbers: Mature Workloads, Fewer Failures

Over the past two months, Aire has handled an impressive and growing volume of jobs submitted by researchers across the University. In May, the system successfully completed over 136,000 jobs and, by June, that number had climbed to more than 177,000, a strong indicator of both rising adoption and trust in the system.

Notably, the number of failed or cancelled jobs across both months represented less than 1% of the total volume, highlighting Aire’s robustness. This low failure rate reflects a system that is not only actively used but also stable and dependable under load—an essential quality for researchers who rely on consistent turnaround for their computational tasks.

As usage continues to grow and the diversity of workloads increases, we’re beginning to see signs of queue pressure. Some users have reported that specific workloads are taking longer to start or are sitting in the queue for extended periods. This is a natural development in any busy HPC environment, especially as job types and demands vary more widely.

To keep pace with growing demand, we’re making routine adjustments to Slurm’s scheduling configuration, an expected and ongoing part of operating a high-demand HPC service. One current focus is improving how job prioritisation is calculated, especially for larger workloads. At present, the system tends to favour many small jobs, which can unintentionally delay the start of larger, resource-intensive tasks. We’re working to strike a better balance so that users with a wide variety of job types see consistent and fair queue behaviour as Aire continues to scale.
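To illustrate why the weighting matters, the toy model below ranks two queued jobs by a weighted sum of an age factor and a job-size factor. It is loosely modelled on the weighted-sum approach of Slurm's multifactor priority plugin (which has weights such as `PriorityWeightAge` and `PriorityWeightJobSize`), but it is only a sketch: the `Job` class, the factor values, and the weights are hypothetical, and none of them reflect Aire's actual configuration.

```python
from dataclasses import dataclass


@dataclass
class Job:
    name: str
    age_factor: float   # 0.0-1.0, grows with time spent in the queue
    size_factor: float  # 0.0-1.0, fraction of the cluster requested


def priority(job: Job, weight_age: float, weight_size: float) -> float:
    """Weighted sum of the two factors; a real scheduler combines more."""
    return weight_age * job.age_factor + weight_size * job.size_factor


def queue_order(jobs, weight_age, weight_size):
    """Job names sorted from highest to lowest priority."""
    ranked = sorted(
        jobs,
        key=lambda job: priority(job, weight_age, weight_size),
        reverse=True,
    )
    return [job.name for job in ranked]


# Hypothetical jobs: the small one has waited slightly longer.
jobs = [
    Job("small", age_factor=0.30, size_factor=0.01),
    Job("large", age_factor=0.25, size_factor=0.50),
]

# Illustrative weights only.
print(queue_order(jobs, weight_age=1000, weight_size=0))
print(queue_order(jobs, weight_age=1000, weight_size=500))
```

With no weight on job size, the small job is ranked first; giving job size a moderate weight moves the large job ahead. Real scheduling also depends on fair-share, partition, and backfill behaviour, which this sketch leaves out.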

User Base: Rapid Onboarding and a Thriving Community

Aire’s adoption has been swift, thanks in large part to a streamlined onboarding process (request an account here) and dedicated support:

- Total users grew by 31% in three months: from 513 (April) to 674 (June).

- Active users (past 28 days) rose from 254 to 360, a clear sign of day-to-day engagement.
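One way to read these two figures together is as an "active share": the fraction of all account holders who used the system in the past 28 days. The sketch below computes it from the numbers above, assuming the active-user counts refer to the same months (April and June) as the totals; the function name is ours.

```python
def active_share_pct(active: int, total: int) -> float:
    """Active users as a percentage of all users, rounded to one decimal place."""
    return round(100 * active / total, 1)


# Totals from the list above; active counts assumed to be April and June.
share_april = active_share_pct(254, 513)
share_june = active_share_pct(360, 674)

print(f"Active share: {share_april}% (April) -> {share_june}% (June)")
```

On these figures, the active share rises from about 49.5% to 53.4%, so active use has grown slightly faster than account creation.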

Figure 5: Total system users, as well as "active users" who have used the system in the past 28 days (approximately monthly active users). Percentage increase is also shown.

Our team in Research IT worked hard to ensure a smooth transition from older systems like ARC4. Beyond creating a brand-new account workflow, we’ve delivered tailored support through individual and group sessions, updated documentation, and Aire-focused training courses, available both live and as self-paced resources. We’ve also streamlined access by integrating with the University’s gateway system and created a user mailing list to improve communication (ask to join our mailing list here).

The result? A user base that’s not just growing—it’s actively using the system and contributing to its success.

Moving Forward — Strong Foundations, Steady Progress

Aire has already proven itself as a capable and reliable system, running close to full capacity whilst supporting hundreds of researchers and a rapidly growing volume of work. In just a few months, we've not only surpassed the usage levels of our previous system, ARC4, but also introduced key new features like project storage quotas and laid the groundwork for Globus integration.

This progress is only possible thanks to the tireless effort of the Research IT team, who continue to onboard users, tune system configurations, and deliver responsive support — all while managing a complex portfolio of services across the University.

While there’s still work ahead, especially around optimising scheduling and expanding application support, the foundation is solid. Aire is evolving fast — and most importantly, it's enabling real research to happen every day.

Authors

Patricia Ternes

Research Software Engineer Manager

David Baldwin

Director of Digital Research

Maeve Murphy Quinlan

Research Software Engineer

Sorrel Harriet

Research Software Engineer